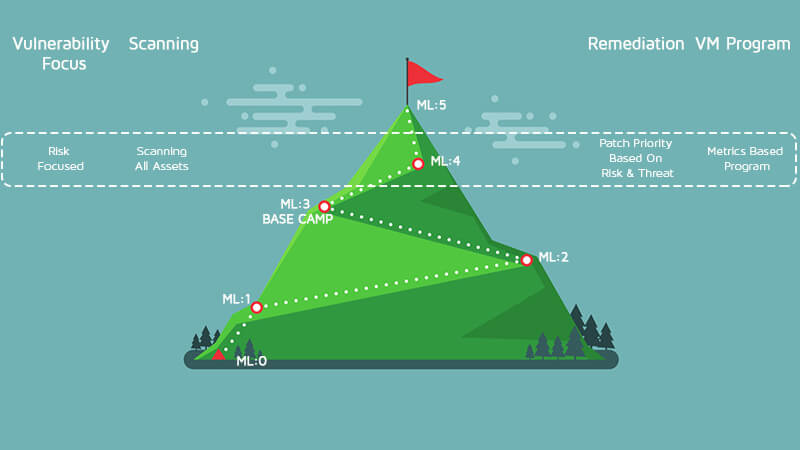

The climb is getting steeper, but thanks to hard work, vision and insight are much keener. At ML:4, all assets are scanned by a combination of agent and remote scans on a normal cadence. This will generate a lot of data dictated by threat and patch priority. Thousands of new vulnerabilities are released each year, and no company or product can detect all of them. Organizations must prioritize their coverage of vulnerabilities that they determine will have the biggest impact. Inputs such as risk, exploit availability, importance of the application or product to the organization and customers are required to determine the prioritization. Remediating every vulnerability in an enterprise environment is nearly impossible. Prioritizing based on risk is the most effective process to get the best return on investment from this costly process. There is no definitive industry method for categorizing risk, and many SEIM and reporting platforms try different approaches. Security risk is the combination of many factors such as but not limited to the following::

- Vulnerability Score – The vulnerability score should come from the vulnerability assessment tool. It provides the base of your risk score. It must be more granular than Critical, High, Medium, and Low. CVSS scores will work if the temporal and environmental scores are used, but the same score tends to be repeated in many instances, making it harder to differentiate results. Nothing beats a granular unbounded score.

- Exploit Availability – Exploit availability is not generally something that most organizations track, but there are vendors that can supply this information. Less than 5% of new vulnerabilities each year have a public exploit, but each exploit could have multiple variants. The fact that there is a public exploit should greatly increase the risk of vulnerability. Seeing multiple variants of an exploit should continue to multiply the risk. The vulnerability score from the VM product should have ease of exploit calculated into it so that easy-to-exploit vulnerabilities already have a higher base score.

- Exploit usage – This data point is also generally supplied by another vendor, or it might be included with some VM tools. This information tracks the popularity of the exploit e.g. is it being added to exploit kits, malware, or ransomware? Is the exploit traffic being picked up by network sensors in attacks? The higher the usage, the higher the likelihood it will impact the organization.

- Importance of the asset – Importance of the asset will be very organization-specific, and details of this were covered previously.

- Mitigations in place – The only true way to remove the threat of a vulnerability is to remove the vulnerability. This is not always possible due to resource constraints, business needs, or timing. In this case, mitigations may be implemented to help protect the environment or asset. Mitigations could be anything from firewall rules to disabling services. While a mitigation is only a band-aid for the real issue, it does reduce the overall risk.

Monitoring these data points and using them to prioritize work will give a valid risk assessment from which to start work in earnest at ML:4. The more sources there are, the more granular the risk assessment can be, but start with a few and build up. Fixing the vulnerabilities is more important than creating the best risk algorithm possible. At the previous maturity level, data points such as Percentage of Coverage, Truly Critical Vulnerabilities, Mean Time to Resolution and Time to Detect were measured. At ML:4, it is now time to turn them into metrics that are tracked monthly (at least) with any metrics that fail being analyzed for the root cause.

- Coverage should always be very high. If not, are systems not being assessed for some reason? Are new systems coming online without proper assessments? If DevOps practices are prevalent at the organization, the use of short-lived images or containers can skew this metric if the assessment tools are not tied into the CI/CD pipeline.

- Truly Critical Vulnerabilities will now be determined using the risk profile discussed above. The metrics should track these separately (count in the environment, and MTR).

- Mean Time to Resolution (MTR) is going to fluctuate because some fixes are harder to apply than others, and there will be times when it is not practical to make changes for business reasons. Plan for these times and also plan around when vendors release patches. (Microsoft releases every 2nd Tuesday, Cisco releases quarterly, Oracle twice a year, etc.)

- Time to Detect should be close to zero if new systems, CI/CD pipelines, and upgrades are being monitored.

Making the move to ML:4 is not easy, and it will take trial and error to find the correct risk profile and metrics for an organization. The best practice is to define something and track it for 3-6 months then tweak it as needed. It could take multiple iterations to find the correct fit for the organization. But ML:4 is worth the climb.

FURTHER READING ON CLIMBING THE VULNERABILITY MANAGEMENT MOUNTAIN:

- Climbing the Vulnerability Management Mountain

- Climbing the Vulnerability Management Mountain: Gearing Up and Taking Step One

- Climbing the Vulnerability Management Mountain: Taking the First Steps Towards Enlightenment

- Climbing the Vulnerability Management Mountain: Reaching Maturity Level 1

- Climbing the Vulnerability Management Mountain: Reaching Maturity Level 2

- Climbing the Vulnerability Management Mountain: Reaching Maturity Level 3

Meet Fortra™ Your Cybersecurity Ally™

Fortra is creating a simpler, stronger, and more straightforward future for cybersecurity by offering a portfolio of integrated and scalable solutions. Learn more about how Fortra’s portfolio of solutions can benefit your business.