Recently, I looked at the CVSS v3.1 scores that Microsoft assigns to Patch Tuesday vulnerabilities alongside the Microsoft-assigned severity ratings. I wanted to revisit these numbers and see just how closely CVSS aligns with Microsoft’s opinion of severity.

Disclaimer: I’m aware that CVSS v4.0 exists. However, Microsoft has not yet adopted it, and I wanted an apples-to-apples comparison.

What Is CVSS v3.1?

CVSS v3.1 provides the Qualitative Severity Rating Scale, which looks like this:

| Rating | CVSS Score |
| --- | --- |
| None | 0.0 |
| Low | 0.1 – 3.9 |
| Medium | 4.0 – 6.9 |
| High | 7.0 – 8.9 |
| Critical | 9.0 – 10.0 |

Source: FIRST.org
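The scale above translates directly into a small lookup. The following is a minimal sketch; the function name `cvss_v31_rating` is illustrative and not part of any CVSS library.

```python
def cvss_v31_rating(score):
    """Map a CVSS v3.1 base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


print(cvss_v31_rating(7.8))  # High
print(cvss_v31_rating(4.2))  # Medium
```

The comparisons assume scores rounded to one decimal place, which is how CVSS base scores are published.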

Microsoft, on the other hand, provides ratings with descriptions which look like this:

| Rating | Description |
| --- | --- |
| Critical | A vulnerability whose exploitation could allow code execution without user interaction. These scenarios include self-propagating malware (e.g., network worms) or unavoidable common-use scenarios where code execution occurs without warnings or prompts. This could mean browsing a web page or opening an email. |
| Important | A vulnerability whose exploitation could result in compromise of the confidentiality, integrity, or availability of user data or of the integrity or availability of processing resources. These scenarios include common-use scenarios where the client is compromised with warnings or prompts regardless of the prompt's provenance, quality, or usability. Sequences of user actions that do not generate prompts or warnings are also covered. |
| Moderate | The impact of the vulnerability is mitigated to a significant degree by factors such as authentication requirements or applicability only to non-default configurations. |
| Low | The impact of the vulnerability is comprehensively mitigated by the characteristics of the affected component. Microsoft recommends that customers evaluate whether to apply the security update to the affected systems. |

Source: Microsoft

Comparing CVSS and Microsoft Ratings

The easiest thing to do would be to map these one-to-one with the identically named Critical and Low bookending the list. I wanted to take this a little bit further and attempt to score the Microsoft descriptions, to see if I could come up with a range. The idea here is that the generated score would be the lower end of the severity range.

The lower end of Microsoft’s Critical rating would be a browser or email vulnerability. Rather than score this one myself, I decided to look at the CVSS v3.1 examples. The Google Chrome Sandbox Bypass vulnerability sounded like Microsoft’s version of Critical to me, and it has a score of 9.6.

Moving on to Important, I decided that the closest example was the Adobe Acrobat Buffer Overflow Vulnerability, which has a score of 7.8.

For Moderate, I liked the example from the Apache Tomcat XML Parser Vulnerability, with its score of 4.2. Of course, Low is going to be anything below that.

Ultimately, our table ends up looking like this and it isn’t that far off from CVSS, but I do note a few differences.

| Rating | Score |
| --- | --- |
| Critical | 9.6 – 10.0 |
| Important | 7.8 – 9.5 |
| Moderate | 4.2 – 7.7 |
| Low | 0.0 – 4.1 |
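To make the derived ranges concrete, here is a minimal sketch that maps a CVSS score to the Microsoft-style rating implied by the table above. The names `DERIVED_LOWER_BOUNDS` and `derived_microsoft_rating` are illustrative, and the mapping is only this article's estimate, not anything Microsoft publishes.

```python
# Lower bound of each derived range, highest rating first.
DERIVED_LOWER_BOUNDS = [
    ("Critical", 9.6),
    ("Important", 7.8),
    ("Moderate", 4.2),
    ("Low", 0.0),
]


def derived_microsoft_rating(score):
    """Return the derived Microsoft-style rating for a CVSS base score."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    for rating, lower_bound in DERIVED_LOWER_BOUNDS:
        if score >= lower_bound:
            return rating


for score in (9.8, 9.0, 7.5, 4.1):
    print(score, derived_microsoft_rating(score))
```

A score of 9.0 is Critical on the CVSS scale but only Important under the derived ranges, which is one of the differences between the two tables.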

The point, however, of this write-up is to explore the true relationship between Microsoft severity and CVSS score, and we have plenty of data points to use. Specifically, using the Microsoft Security Response Center (MSRC) API, I pulled all vulnerability data from 2017 until the end of 2024, so let’s dig in and see what we’re looking at.
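The code used for the pull is not shown here, so the following is only a sketch of how a CVRF-style JSON document might be flattened into one record per CVE. The field names (`Vulnerability`, `CVSSScoreSets`, `BaseScore`, `Threats`, and the `Type` values 1 and 3) reflect my understanding of the MSRC CVRF JSON layout and should be treated as assumptions to verify against a real response. Taking the highest score and highest severity per CVE is also my own choice, and the CVE IDs in the sample are placeholders.

```python
SEVERITY_ORDER = ["Low", "Moderate", "Important", "Critical"]


def extract_records(cvrf_doc):
    """Flatten a CVRF-style document into one record per CVE."""
    records = []
    for vuln in cvrf_doc.get("Vulnerability", []):
        threats = vuln.get("Threats", [])
        # Assumed convention: Type 3 = severity, Type 1 = exploit status.
        severities = [
            t.get("Description", {}).get("Value")
            for t in threats
            if t.get("Type") == 3
        ]
        severities = [s for s in severities if s in SEVERITY_ORDER]
        exploited = any(
            t.get("Type") == 1
            and "Exploited:Yes" in (t.get("Description", {}).get("Value") or "")
            for t in threats
        )
        scores = [
            float(s["BaseScore"])
            for s in vuln.get("CVSSScoreSets", [])
            if s.get("BaseScore") is not None
        ]
        if not scores or not severities:
            continue  # skip entries that lack a score or a severity
        records.append({
            "cve": vuln.get("CVE"),
            "score": max(scores),
            "severity": max(severities, key=SEVERITY_ORDER.index),
            "exploited": exploited,
        })
    return records


# Hypothetical sample shaped like the assumed layout.
sample_doc = {
    "Vulnerability": [
        {
            "CVE": "CVE-0000-0001",
            "CVSSScoreSets": [{"BaseScore": 7.8}, {"BaseScore": 7.0}],
            "Threats": [
                {"Type": 3, "Description": {"Value": "Important"}},
                {"Type": 1, "Description": {"Value": "Publicly Disclosed:No;Exploited:Yes"}},
            ],
        },
        {
            "CVE": "CVE-0000-0002",
            "CVSSScoreSets": [],
            "Threats": [{"Type": 3, "Description": {"Value": "Low"}}],
        },
    ]
}

print(extract_records(sample_doc))
```

Entries without a CVSS score are dropped here; whether to drop or keep them changes the totals, so that decision should be made deliberately.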

There were 5,926 vulnerabilities in total in the data that I pulled, which is a lot of CVSS scores to review. So, I decided to go back to high school math for a few minutes and look at the mean, median, and mode.

Mean CVSS Score: 7.0

Median CVSS Score: 7.5

Mode CVSS Score: 7.8 (with 1,623 occurrences)

I was a little surprised by how often a score of 7.8 occurs. With 1,623 vulnerabilities out of 5,926 total vulnerabilities, a score of 7.8 represents 27.4% of all assigned scores, which seems high. If more than 1 in 4 vulnerabilities are scored the same, that makes this a difficult metric to use for prioritization. Where I was really surprised was when I broke the data down further by Microsoft severity level.
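For readers who want to reproduce this kind of summary, here is a minimal sketch using only the Python standard library. The `summarize` helper and the `sample` scores are illustrative; only the final line uses figures from this article.

```python
from collections import Counter
from statistics import mean, median


def summarize(scores):
    """Return mean, median, mode, and the mode's share of all scores."""
    mode_value, mode_count = Counter(scores).most_common(1)[0]
    return {
        "mean": round(mean(scores), 1),
        "median": median(scores),
        "mode": mode_value,
        "mode_count": mode_count,
        "mode_share_pct": round(100 * mode_count / len(scores), 1),
    }


# Hypothetical scores, not the real data set.
sample = [7.8, 7.8, 7.5, 4.2, 9.8, 7.8, 5.5]
print(summarize(sample))

# Share of the 7.8 mode in the article's data: 1,623 of 5,926.
print(round(100 * 1623 / 5926, 1))  # 27.4
```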

Critical (735 vulnerabilities)

Mean CVSS Score: 7.3

Median CVSS Score: 7.8

Mode CVSS Score: 4.2 (with 167 occurrences)

Important (4,783 vulnerabilities)

Mean CVSS Score: 7.0

Median CVSS Score: 7.5

Mode CVSS Score: 7.8 (with 1,544 occurrences)

Moderate (127 vulnerabilities)

Mean CVSS Score: 6.6

Median CVSS Score: 7.5

Mode CVSS Score: 7.5 (with 73 occurrences)

Low (281 vulnerabilities)

Mean CVSS Score: 4.9

Median CVSS Score: 4.2

Mode CVSS Score: 4.2 (with 167 occurrences)

The good news here is that the mean CVSS score does get lower as the severity decreases, which is what we would expect. The frequency with which a score of 7.8 occurs is no longer surprising when you consider how many vulnerabilities are classified as Important. In total, 80.7% of Microsoft’s vulnerabilities released in the past 8 years have had a severity rating of Important.
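The per-severity breakdown can be sketched the same way. This is a minimal example under the assumption that each record is a `(severity, score)` pair; `stats_by_severity` and the sample records are illustrative, while the `counts` dictionary uses the totals reported above.

```python
from collections import defaultdict
from statistics import mean, median, multimode


def stats_by_severity(records):
    """Group (severity, score) pairs and summarize each group."""
    groups = defaultdict(list)
    for severity, score in records:
        groups[severity].append(score)
    return {
        severity: {
            "count": len(scores),
            "mean": round(mean(scores), 1),
            "median": median(scores),
            "modes": multimode(scores),  # every value tied for most frequent
        }
        for severity, scores in groups.items()
    }


# Hypothetical records, not the real data set.
sample_records = [
    ("Critical", 9.8), ("Critical", 4.2), ("Critical", 4.2),
    ("Important", 7.8), ("Important", 7.8), ("Important", 7.5),
    ("Low", 4.2),
]
print(stats_by_severity(sample_records))

# Share of each severity level, using the counts reported in this article.
counts = {"Critical": 735, "Important": 4783, "Moderate": 127, "Low": 281}
total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
print(total, shares["Important"])  # 5926 80.7
```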

Surprising similarities

So, where did I find myself surprised? The biggest surprise was that the most frequently occurring Critical severity CVSS score was the same as the most frequently occurring Low CVSS score and, oddly, they both had 167 occurrences. I’m surprised that there are that many vulnerabilities that Microsoft considered Critical that fell near the lowest end of what CVSS considers to be Medium (4.0 – 6.9). From a prioritization standpoint, that is an absolute nightmare to reconcile. I decided to dig a little deeper, and something must have changed at Microsoft: the last Critical vulnerability assigned a CVSS score of 4.2 was in 2021, and it was the only one that year. The other 166 occurrences happened between 2017 and 2020.
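One way to dig into a mismatch like this is to count, per year, the vulnerabilities that Microsoft rated Critical but that scored below the CVSS High band. The sketch below assumes each record is a dictionary with `year`, `severity`, and `score` keys; that shape, the function name, and the sample records are all hypothetical.

```python
from collections import Counter


def critical_below_high_by_year(records):
    """Count Microsoft-Critical entries with a CVSS score under 7.0, per year."""
    return Counter(
        r["year"]
        for r in records
        if r["severity"] == "Critical" and r["score"] < 7.0
    )


# Hypothetical records, not the real data set.
sample_records = [
    {"year": 2018, "severity": "Critical", "score": 4.2},
    {"year": 2018, "severity": "Critical", "score": 4.2},
    {"year": 2018, "severity": "Critical", "score": 8.8},
    {"year": 2021, "severity": "Critical", "score": 4.2},
    {"year": 2021, "severity": "Low", "score": 4.2},
    {"year": 2023, "severity": "Critical", "score": 9.8},
]

by_year = critical_below_high_by_year(sample_records)
for year in sorted(by_year):
    print(year, by_year[year])
```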

Also interesting to me was that the Critical median matched the Important mode (7.8), and that the Important and Moderate severity levels shared the same median (7.5), which also happened to be the mode for Moderate. These numbers were more similar than I expected them to be.

Then, I started to wonder if Microsoft paid more attention to exploited vulnerabilities and if we’d see something different looking at just those. Across the 8 years, 124 vulnerabilities patched by Microsoft were labeled as exploited.

Mean CVSS Score: 7.5

Median CVSS Score: 7.8

Mode CVSS Score: 7.8 (with 47 occurrences)

Overall, not much change. The mean climbed to match the total vulnerability median, while the median and mode matched the total vulnerability mode. What if we break it down by severity?

Critical (19 vulnerabilities)

Mean CVSS Score: 8.3

Median CVSS Score: 7.8

Mode CVSS Score: 7.8 (with 5 occurrences)

Important (91 vulnerabilities)

Mean CVSS Score: 7.4

Median CVSS Score: 7.8

Mode CVSS Score: 7.8 (with 42 occurrences)

Moderate (13 vulnerabilities)

Mean CVSS Score: 6.8

Median CVSS Score: 7.5

Mode CVSS Score: 7.5 (with 6 occurrences)

Low (1 vulnerability)

Mean CVSS Score: 4.3

Median CVSS Score: 4.3

Mode CVSS Score: 4.3 (with 1 occurrence)

Again, not much overall change. Our Critical severity vulnerabilities are looking a little better with a mean of 8.3, but that’s still lower than I would expect it to be given CVSS’s range for Critical.

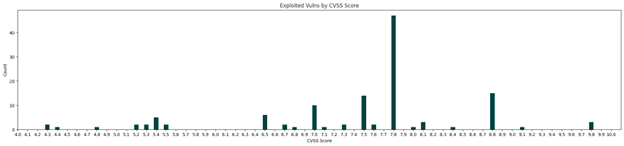

Exploited vulnerabilities mapped against their CVSS Scores

Where things become more interesting is when you start to look at exploited vulnerabilities mapped against their CVSS Scores.

A lot of the actively exploited vulnerabilities rank around the middle of the graph instead of clustering toward the right, which is what I would expect. If we return to the idea of prioritization, the CISA Known Exploited Vulnerabilities Catalog (KEV) then adds another interesting angle to the conversation. As the KEV states, “Organizations should use the KEV catalog as an input into their vulnerability management prioritization framework.”
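A distribution like this can be checked without a plotting library. The following minimal sketch buckets scores into whole-number bands and prints a text histogram; the helper names and the sample scores are illustrative and do not reproduce the real data.

```python
from collections import Counter


def score_histogram(scores):
    """Count scores per whole-number band; 10.0 is folded into the 9.x band."""
    counts = Counter(min(int(score), 9) for score in scores)
    return [(band, counts.get(band, 0)) for band in range(10)]


def render(histogram):
    """Render the histogram as one text row per band."""
    lines = []
    for band, count in histogram:
        label = "9.0-10.0" if band == 9 else f"{band}.0-{band}.9"
        lines.append(f"{label:>8} | {'#' * count} {count}")
    return "\n".join(lines)


# Hypothetical scores for exploited vulnerabilities.
sample_scores = [7.8, 7.8, 7.5, 8.8, 5.4, 4.3, 9.8, 6.5, 7.0]
print(render(score_histogram(sample_scores)))
```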

We have exploited vulnerabilities across all Microsoft severity levels and the bulk of the CVSS scoring range, which is why the KEV describes itself as “an input” into your “prioritization framework” rather than the sole basis for prioritization. It’s also a great reason why standards that require patching based on CVSS are out of touch with the importance of proper prioritization. There are a lot of levers that you can pull when working to prioritize vulnerabilities, so make sure that you’re utilizing them and not just relying on a single source of prioritization.
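To illustrate what combining several inputs could look like, here is a deliberately simple sketch that sorts on KEV membership first, then vendor severity, then CVSS score. The ordering of those inputs, the record shape, and the placeholder IDs are all assumptions made for illustration; a real prioritization framework would weigh more factors, such as asset exposure and business impact.

```python
SEVERITY_RANK = {"Critical": 3, "Important": 2, "Moderate": 1, "Low": 0}


def priority_key(vuln):
    """Sort key: KEV membership, then vendor severity, then CVSS score."""
    return (vuln["in_kev"], SEVERITY_RANK[vuln["severity"]], vuln["score"])


def prioritize(vulns):
    """Return vulnerabilities ordered from highest to lowest priority."""
    return sorted(vulns, key=priority_key, reverse=True)


# Hypothetical records with placeholder IDs.
sample = [
    {"id": "A", "in_kev": False, "severity": "Critical", "score": 9.8},
    {"id": "B", "in_kev": True, "severity": "Important", "score": 7.8},
    {"id": "C", "in_kev": True, "severity": "Moderate", "score": 5.4},
    {"id": "D", "in_kev": False, "severity": "Important", "score": 7.8},
]

print([v["id"] for v in prioritize(sample)])  # ['B', 'C', 'A', 'D']
```

In this ordering, a Moderate vulnerability with a 5.4 score outranks a 9.8 Critical one because it is known to be exploited, which is exactly the kind of result a CVSS-only policy would miss.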

At the end of the day, I wanted to look at the numbers, and I’m not sure they really tell a story. I do believe, however, that they disprove the idea that CVSS is a valid prioritization metric on its own. They were also interesting on their own, so I hope you enjoyed this walk through the numbers with me.