It seems like every day you see a new report about a massive data leak caused by someone accidentally exposing files stored in AWS S3 buckets to everyone on the Internet. Many may remember Verizon’s infamous snafu, which leaked data records for six million of its customers because of a misconfiguration in its S3 buckets. Since then, there has also been a host of other S3 storage breaches in the news.

Amazon has put a lot of effort into making S3 access configuration as flexible as possible, but in doing so, they also made it easy to inadvertently expose your buckets and the objects inside them. In fact, there are so many ways you could potentially expose your buckets that manually evaluating your configuration settings can become a giant headache, and that process is itself prone to human error. It’s no wonder these S3 storage breaches are becoming commonplace. I wanted to take a moment to dive into this a little to explain how these misconfigurations occur, how you can manually check your own AWS S3 storage to ensure you aren't making the same mistakes, and finally how you can use the Tripwire Enterprise Cloud Management Assessor to automatically assess your S3 storage for any files that may be inadvertently exposed. If your interest in securing AWS extends beyond S3 storage, I would recommend checking out the following post by Ben Layer: "Securing AWS Management Configurations By Combating 6 Common Threats."

WHAT IS AWS S3?

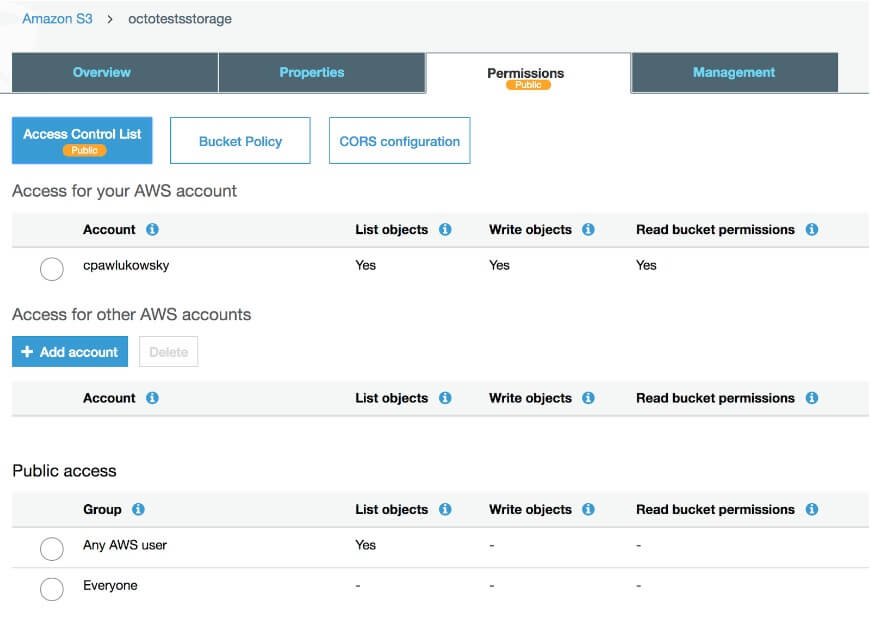

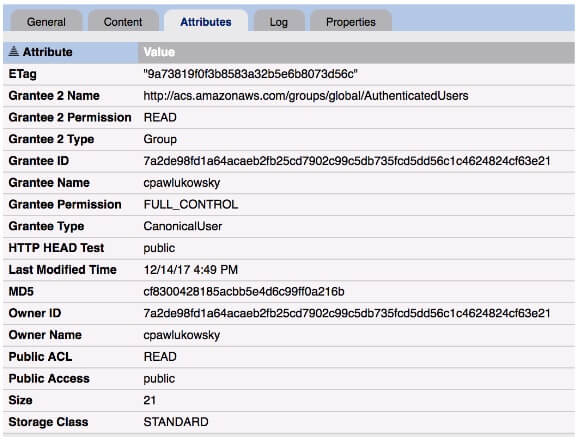

AWS S3, otherwise known as the Simple Storage Service, lets you store arbitrary objects inside of buckets. Those objects can be retrieved by URL, assuming you have the appropriate permissions to do so. For example, you could store a simple JavaScript file in an S3 bucket that you expose for anonymous read access, and then include it in a script tag on a public static webpage using a URL like https://s3-us-west-2.amazonaws.com/mypublicbucket/my_public_file.js

There are two primary methods for managing external access to your S3 objects. The first uses what AWS S3 nomenclature calls ACLs (access control lists), which is by far the more basic of the two methods I’ll discuss here. Through ACLs, you can grant basic read/write permissions to other AWS accounts or to predefined S3 groups. If you’re granting access to AWS accounts, you of course want to audit those accounts and their levels of access to ensure the principle of least privilege is being followed. For the purposes of this discussion, though, what we’re most concerned with is making sure we haven’t exposed our objects to anonymous access. To that end, you want to make sure you haven’t granted read and/or write permissions to either the “AuthenticatedUsers” or “AllUsers” predefined global groups. Any read and/or write permission for these groups in an ACL should immediately raise a red flag. Grant either of these predefined groups permissions to your objects only when absolutely necessary, and always place a high priority on evaluating any change in access that affects these groups. While it may be obvious why you should be careful about granting access to a group called “AllUsers,” you may have raised an eyebrow at “AuthenticatedUsers.” Why would you need to be concerned about that? Because it includes any authenticated AWS user, not just the users associated with your own account.
Anyone could register a new AWS account and use its credentials to request your files as an authenticated AWS user if your files grant permissions to this group. For all intents and purposes, it may as well be anonymous access, only with an added false sense of security if you don’t understand just how many users you are exposing your buckets and objects to.

So how can you manually tell if you’ve exposed S3 objects via an ACL? Clicking through the AWS console is one way. If you navigate to an S3 bucket or object in the AWS console and click the Permissions tab, you can determine whether it has been exposed by looking for permissions for the “Everyone” or “Any AWS user” groups under the Public access section. Amazon will also usually helpfully place yellow “Public” markers in the UI if it has detected that you’ve exposed a bucket or file publicly. That big yellow “Public” marker should be your red flag to go assess those buckets and/or objects.

Alternatively, you can use the AWS CLI tool to list your buckets and objects and find any ACLs you have applied to them using the following commands:

    aws s3api list-buckets
    aws s3api get-bucket-acl --bucket bucket-name
    aws s3api list-objects --bucket bucket-name
    aws s3api get-object-acl --bucket bucket-name --key file-name
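If you have more than a handful of buckets, the same check can be scripted. Below is a minimal sketch in Python, assuming boto3 is installed and configured with the same credentials as the AWS CLI. It assumes the response shape that boto3's `get_bucket_acl` and `get_object_acl` return, in which grants to predefined groups identify the group by a `URI`; the function names `public_grants` and `scan_bucket_acls` are my own, not part of any AWS API.

```python
"""Flag ACL grants that expose S3 buckets/objects to AllUsers or AuthenticatedUsers."""

# Predefined global groups that should raise a red flag in any ACL.
RISKY_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers": "AllUsers",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers": "AuthenticatedUsers",
}


def public_grants(acl):
    """Return (group, permission) pairs for grants to the risky global groups.

    `acl` is a dict shaped like the response of get_bucket_acl/get_object_acl.
    """
    findings = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        group = RISKY_GROUPS.get(grantee.get("URI", ""))
        if grantee.get("Type") == "Group" and group:
            findings.append((group, grant.get("Permission")))
    return findings


def scan_bucket_acls():
    """Print risky grants on every bucket ACL (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so public_grants() works without boto3

    s3 = boto3.client("s3")
    for bucket in s3.list_buckets()["Buckets"]:
        name = bucket["Name"]
        for group, permission in public_grants(s3.get_bucket_acl(Bucket=name)):
            print(f"{name}: {permission} granted to {group}")
```

`scan_bucket_acls()` only looks at bucket ACLs; object ACLs could be checked the same way by passing each `get_object_acl` response to `public_grants`.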

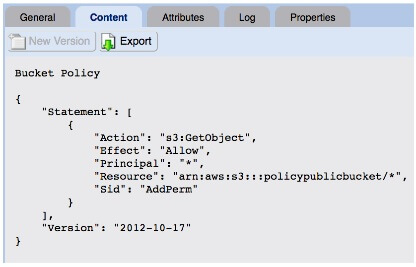

The second method for managing access to your S3 objects is using bucket policies or IAM user policies. It’s far more complicated than using ACLs and, surprise, offers you yet more flexibility. Bucket policies and user policies are access policy options for granting permissions to S3 resources using a JSON-based access policy language. In a policy, you grant specific principals permission to perform specific actions on specific resources. Resources are your buckets and objects. Actions are the set of operations permitted on those resources. Principals are the accounts or users who are allowed to perform those actions on those resources. Where things start getting really complicated is that you can use wildcards for your resources, actions, and principals. An example S3 bucket policy that would expose all of the files in your bucket is the following:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AddPerm",
          "Effect": "Allow",
          "Principal": "*",
          "Action": ["s3:GetObject"],
          "Resource": ["arn:aws:s3:::examplebucket/*"]
        }
      ]
    }
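A first pass at spotting policies like this one can also be automated. The Python sketch below flags "Allow" statements whose principal is a wildcard ("*" or {"AWS": "*"}) and that carry no Condition. Treating any Condition as a restriction is a deliberate simplification on my part, since a condition can itself be permissive, so anything this misses still needs a human look. The helper names are hypothetical.

```python
"""Flag bucket-policy statements that allow access to every principal."""
import json


def _as_list(value):
    """Policy elements may be a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def has_wildcard_principal(principal):
    """True for "*" or {"AWS": "*"} (or an AWS list containing "*")."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return "*" in _as_list(principal.get("AWS"))
    return False


def public_statements(policy):
    """Return Allow statements with a wildcard principal and no Condition block."""
    findings = []
    for stmt in _as_list(policy.get("Statement")):
        if (stmt.get("Effect") == "Allow"
                and has_wildcard_principal(stmt.get("Principal"))
                and not stmt.get("Condition")):
            findings.append(stmt)
    return findings


policy = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [{
    "Sid": "AddPerm",
    "Effect": "Allow",
    "Principal": "*",
    "Action": ["s3:GetObject"],
    "Resource": ["arn:aws:s3:::examplebucket/*"]
  }]
}
""")
for stmt in public_statements(policy):
    print(stmt.get("Sid"), stmt["Action"], stmt["Resource"])
# prints: AddPerm ['s3:GetObject'] ['arn:aws:s3:::examplebucket/*']
```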

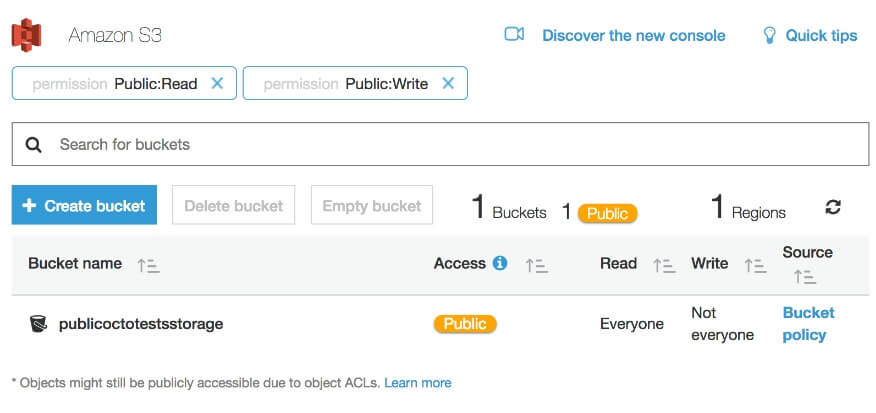

Now hold on to your butts, because these policies are about to get a lot more complicated. Your policy can contain multiple statements, some with an effect of “Deny” to deny actions and others with an effect of “Allow” to allow them. You can also provide a set of conditions for these statements to further restrict access. So while you may have a wildcard principal of “*”, you may further restrict principals by supplying a “Condition” or two. You could limit access to a set of IP addresses, to requests with a certain referrer, or to AWS user IDs matching wildcard strings, among other things. On top of all that, Amazon also has a concept of policy “variables” that expand dynamically into different values. For example, you could use the variable “${aws:username}” in your Resource field, and it would expand into the friendly name of the current user.

Sounding complicated? We’re not done yet. Instead of specifying “Principal,” “Action,” or “Resource” in your policy statements, you could provide “NotPrincipal,” “NotAction,” and “NotResource,” which apply the statement’s effect to everything except what is listed inside them. Are you using these? If so, why do you do this to yourselves?

If it sounds like it’s going to be hard to manually assess your policies, that’s because it probably is. You can make this easier on yourself by keeping your policies as simple as possible. It may be tempting to apply all kinds of fancy policy logic to your buckets and objects, but that kind of complexity greatly increases the chance of unintended exposures. The AWS S3 console will again show you a very visible warning about any buckets that are exposed via your bucket policies, and it will even let you filter to show only the buckets it deems “Public” via either your ACLs or bucket policies.
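Since the hard part is knowing which statements need a closer look, one option is to have a script point them out. This Python sketch lists the features described above (NotPrincipal, NotAction, NotResource, Condition blocks, and policy variables) in a single statement; it does not try to evaluate them. The helper `review_reasons` and the example account ID 111122223333 are hypothetical.

```python
"""List the policy features that make a statement hard to evaluate by eye."""

NEGATED_ELEMENTS = ("NotPrincipal", "NotAction", "NotResource")


def review_reasons(stmt):
    """Return human-readable reasons a policy statement deserves manual review."""
    reasons = []
    for element in NEGATED_ELEMENTS:
        if element in stmt:
            reasons.append(f"uses {element}: effect applies to everything NOT listed")
    if stmt.get("Condition"):
        operators = ", ".join(sorted(stmt["Condition"]))
        reasons.append(f"access depends on conditions ({operators})")
    resources = stmt.get("Resource", [])
    if isinstance(resources, str):
        resources = [resources]
    if any("${" in r for r in resources):
        reasons.append("resource uses policy variables such as ${aws:username}")
    return reasons


# Hypothetical statement: deny everyone except one account over plain HTTP.
statement = {
    "Effect": "Deny",
    "NotPrincipal": {"AWS": "arn:aws:iam::111122223333:root"},
    "Action": "s3:*",
    "Resource": "arn:aws:s3:::examplebucket/*",
    "Condition": {"Bool": {"aws:SecureTransport": "false"}},
}
for reason in review_reasons(statement):
    print("-", reason)
# prints:
# - uses NotPrincipal: effect applies to everything NOT listed
# - access depends on conditions (Bool)
```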

Using the AWS CLI tool, you can find the policy settings of your buckets using the following command:

    aws s3api get-bucket-policy --bucket bucket-name
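Note that get-bucket-policy returns the policy document as a JSON-encoded string inside a "Policy" field, and a bucket with no policy produces a NoSuchBucketPolicy error rather than an empty result. Here is a minimal Python sketch that handles both, assuming boto3 and configured credentials; the function names are my own.

```python
"""Fetch and decode a bucket policy, treating "no policy" as an empty result."""
import json


def decode_policy_response(response):
    """get-bucket-policy returns the document as a JSON string under "Policy"."""
    policy = json.loads(response["Policy"])
    statements = policy.get("Statement", [])
    # A single statement may appear as an object rather than a one-item list.
    if isinstance(statements, dict):
        statements = [statements]
    return statements


def get_bucket_statements(bucket):
    """Return the bucket's policy statements, or [] if it has no bucket policy."""
    import boto3  # imported lazily so decode_policy_response() needs no AWS SDK
    from botocore.exceptions import ClientError

    s3 = boto3.client("s3")
    try:
        return decode_policy_response(s3.get_bucket_policy(Bucket=bucket))
    except ClientError as err:
        if err.response["Error"]["Code"] == "NoSuchBucketPolicy":
            return []
        raise
```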

With all these permutations of policies and ACLs, it gets very complicated to determine whether a file is actually exposed on the Internet when it shouldn’t be. In fact, I might suggest that your policies could be so complicated that probing your files directly, by sending HEAD requests to your bucket and object URLs, may be a prudent step in addition to manually assessing any changes in policies and ACLs. Probing with HEAD requests is a fairly definitive test for checking that you haven’t exposed your objects to the masses: if you get a 200 response to an unauthenticated HEAD request, you can be sure your file is exposed.

    # curl -I http://mybucket.s3.amazonaws.com/public.txt
    HTTP/1.1 200 OK
    x-amz-id-2: 74p+ISJgFK+OBr0X0hNT14+jTRMjerF6v8FSPM/EKvfweLFv8dqLa20MSvFPPHSxGf+ppDVdH5Y=
    x-amz-request-id: 42387922120B2B55
    Date: Mon, 11 Dec 2017 22:33:45 GMT
    Last-Modified: Mon, 11 Dec 2017 18:46:06 GMT
    ETag: "0b26e313ed4a7ca6904b0e9369e5b957"
    Accept-Ranges: bytes
    Content-Type: text/plain
    Content-Length: 19
    Server: AmazonS3

    # curl -I http://mybucket.s3.amazonaws.com/private.txt
    HTTP/1.1 403 Forbidden
    x-amz-request-id: CF33CE1511EF1811
    x-amz-id-2: y1mBWlGZllczQ77p3XuIm0a0G+nVc7pPTxq63mMAX5gLLExbddtS80arxMKyrWSi9czlKFdKBc0=
    Content-Type: application/xml
    Transfer-Encoding: chunked
    Date: Mon, 11 Dec 2017 22:34:26 GMT
    Server: AmazonS3
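The same probe can be scripted across many URLs. This sketch uses only Python's standard library and maps the status of an anonymous HEAD request to a verdict; treating anything other than 2xx, 401, or 403 (for example, a redirect to another region's endpoint) as "inconclusive" is my own simplifying assumption, and the function names are hypothetical.

```python
"""Probe S3 object URLs anonymously with HEAD requests (standard library only)."""
import urllib.error
import urllib.request


def classify_status(status):
    """Map an HTTP status from an anonymous HEAD request to a verdict."""
    if 200 <= status < 300:
        return "exposed"
    if status in (401, 403):
        return "not publicly readable"
    return "inconclusive"


def probe(url, timeout=10):
    """Send an unauthenticated HEAD request and classify the response."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return classify_status(response.status)
    except urllib.error.HTTPError as err:
        # urlopen raises for 4xx/5xx; the status code is still what we need.
        return classify_status(err.code)
```

Given the curl output above, `probe("http://mybucket.s3.amazonaws.com/public.txt")` would be expected to return "exposed" and the private file "not publicly readable". Network failures (`urllib.error.URLError`) are deliberately left to propagate so they are not mistaken for a verdict.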

Of course, these are unauthenticated requests, and your files may instead be exposed to all authenticated AWS users via the “Any AWS user” group. I should reiterate that this group includes every authenticated AWS user in the world, not just the users associated with your own AWS account. To catch this case, you could use a library like python-requests-aws to make authenticated HEAD requests to your S3 buckets and objects. Ideally, sign those requests with credentials from a separate AWS account, since a request signed with your own account’s credentials may succeed simply because of your own permissions. Using this library, code like the following makes an authenticated HEAD request to an object in one of your buckets, where ACCESS_KEY and SECRET_KEY hold the access key ID and secret access key:

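    import requests
    from awsauth import S3Auth  # module provided by python-requests-aws
    url = 'http://mybucket.s3.amazonaws.com/public.txt'
    print(requests.head(url, auth=S3Auth(ACCESS_KEY, SECRET_KEY)).ok)  # True if readable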
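As an alternative to python-requests-aws, boto3 can sign the request for you. In this sketch, `readable_by` is a hypothetical helper that takes an S3 client built from credentials in a separate AWS account and reports whether that account can HEAD a given object. It assumes boto3's behaviour of raising a ClientError whose `response["Error"]["Code"]` is "403" when a HEAD request is denied; the profile name in the usage comment is hypothetical.

```python
"""Check whether an object is readable by an unrelated AWS account (boto3 sketch)."""

DENIED_CODES = {"403", "AccessDenied"}


def readable_by(s3_client, bucket, key):
    """Return True if the client's credentials can HEAD the object.

    Pass a client built from credentials in a *separate* AWS account, so a
    success reflects "Any AWS user" exposure rather than your own permissions.
    """
    try:
        s3_client.head_object(Bucket=bucket, Key=key)
        return True
    except Exception as err:  # botocore raises ClientError with a .response dict
        code = getattr(err, "response", {}).get("Error", {}).get("Code")
        if code in DENIED_CODES:
            return False
        raise  # anything else (404, throttling, network) is not a verdict


# Usage (profile name is hypothetical):
#   import boto3
#   outsider = boto3.Session(profile_name="unrelated-account").client("s3")
#   print(readable_by(outsider, "mybucket", "public.txt"))
```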

How Tripwire Can Help

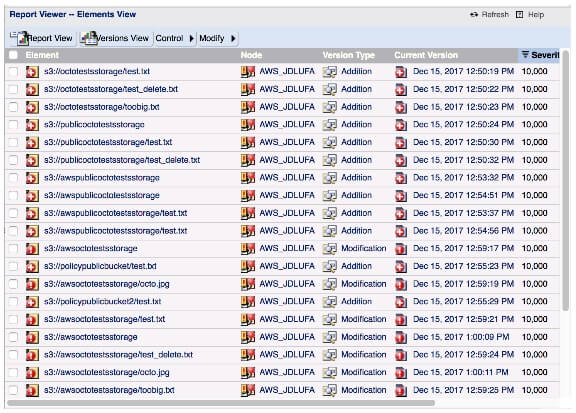

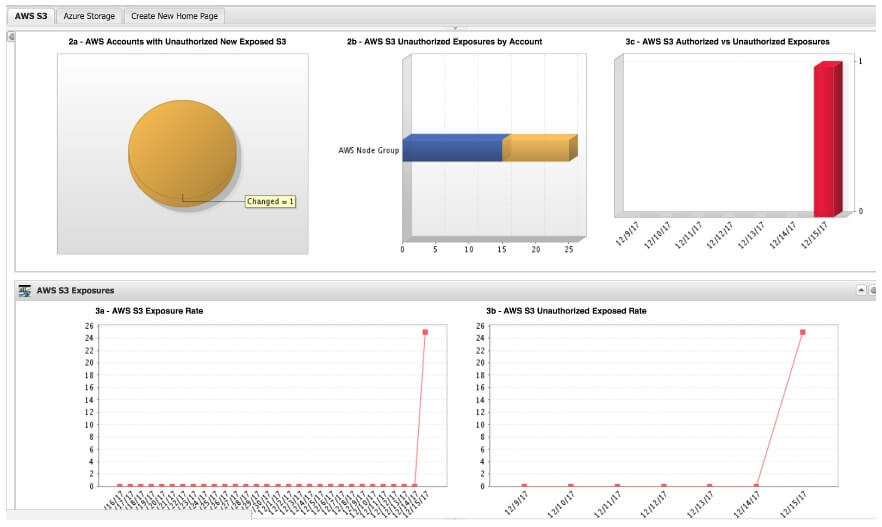

Using the Tripwire Enterprise Cloud Management Assessor, we can automatically assess your AWS S3 buckets and objects to determine whether they are exposed for anonymous access and report on objects that have become newly exposed. It can help you with your Microsoft Azure Storage, too, but I’ll get into that in a different post. The Cloud Management Assessor scans each of the buckets and objects you have stored in S3 to retrieve metadata, file contents, ACL, and policy information, and tracks all of it for changes.

You can then view the S3 Storage dashboard to see charts visualizing new buckets and objects that have become exposed and drill into the details of all your exposed files. Next, you can use Tripwire Enterprise’s approval system to promote any changes to the new baseline, effectively marking intentionally exposed files as approved.

How does it determine whether files are exposed? By applying multiple layers of analysis. First, it evaluates the ACLs and policies applied to buckets and objects to determine whether a bucket or object is exposed to the public. As an added layer of analysis on top of that, the Cloud Management Assessor can also make HEAD requests to each of the objects and buckets in your storage, a more definitive test that your files have not been exposed. You can even provide a separate AWS access key and secret key so that the Cloud Management Assessor can make authenticated HEAD requests as part of this live probing, to confirm that your objects and buckets are not exposed to all authenticated AWS users. If you want to know more about the Cloud Management Assessor, feel free to check out its datasheet.

I know I covered a lot here, but if there’s one thing to take away from this, it’s that AWS S3 permissions are very complicated. As with any complex permission system, it’s easy to make mistakes and unintentionally expose your buckets and objects. You should take a two-pronged approach to ensure you won’t have to deal with your own S3 storage breach. First, you must continually and manually evaluate all of the ACLs and policies that affect access to your S3 storage to ensure you’re adhering to the principle of least privilege. And second, you should most certainly be using a tool like the Tripwire Enterprise Cloud Management Assessor to continuously assess access to your S3 storage in an automated way, to ensure you haven’t unintentionally exposed anything to the public.
